What are Modern Data Pipelines?

Understanding ETL and ELT Workflows

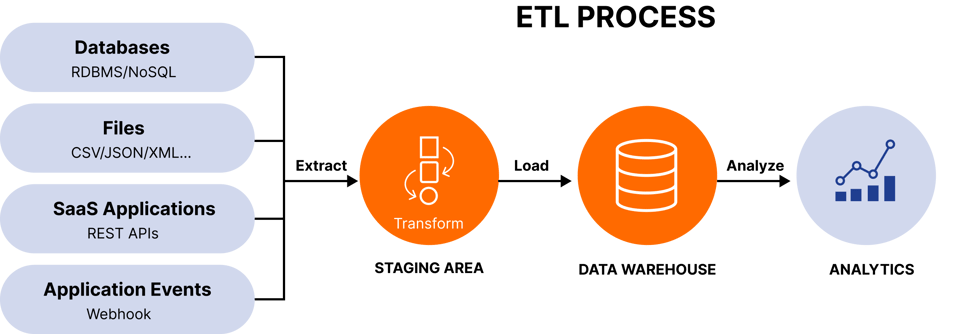

ETL (Extract, Transform, Load)

ELT (Extract, Load, Transform)

ETL vs ELT: Side-by-Side Comparison

| Category | ETL | ELT |
| --- | --- | --- |
| Definition | Data is extracted, transformed, and then loaded. | Data is extracted, loaded, and then transformed. |
| Transform | Transformed on a separate server before loading. | Transformed inside the destination system. |
| Load | Loads transformed data into the destination system. | Loads raw data into the destination system for later transformation. |
| Speed | Time-intensive due to early transformation. | Faster, as raw data is loaded directly. |
| Data Output | Ideal for structured data. | Supports structured, semi-structured, and unstructured data. |
| Scalability | Suited for smaller datasets with complex transformations. | Optimized for large datasets with simpler transformations. |
| Maintenance | Requires maintenance of a separate transformation server. | Simplified, with fewer systems to maintain. |
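As a rough illustration of the difference in the table above, the sketch below runs the same cleanup both ways against an in-memory SQLite database. It is a minimal example under stated assumptions, not a production pattern: the `RAW_ORDERS` records, the table names, and the `run_etl` and `run_elt` helpers are all hypothetical, and SQLite merely stands in for a destination system.

```python
import sqlite3

# Hypothetical raw records standing in for an extracted source.
RAW_ORDERS = [
    {"id": 1, "amount": "19.50", "country": "us"},
    {"id": 2, "amount": "5.00", "country": "de"},
]


def run_etl(conn, rows):
    """ETL: transform in application code first, then load the clean rows."""
    cleaned = [(r["id"], float(r["amount"]), r["country"].upper()) for r in rows]
    conn.execute("CREATE TABLE orders_etl (id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def run_elt(conn, rows):
    """ELT: load raw rows as-is, then transform with SQL inside the destination."""
    conn.execute("CREATE TABLE orders_raw (id INTEGER, amount TEXT, country TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (:id, :amount, :country)", rows)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT id, CAST(amount AS REAL) AS amount, UPPER(country) AS country "
        "FROM orders_raw"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn, RAW_ORDERS)
run_elt(conn, RAW_ORDERS)

etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY id").fetchall()
print(etl_rows == elt_rows)  # both paths end with the same cleaned table
```

In the ELT path the raw table stays in the destination, so the transformation can be rerun or revised later without extracting the data again.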

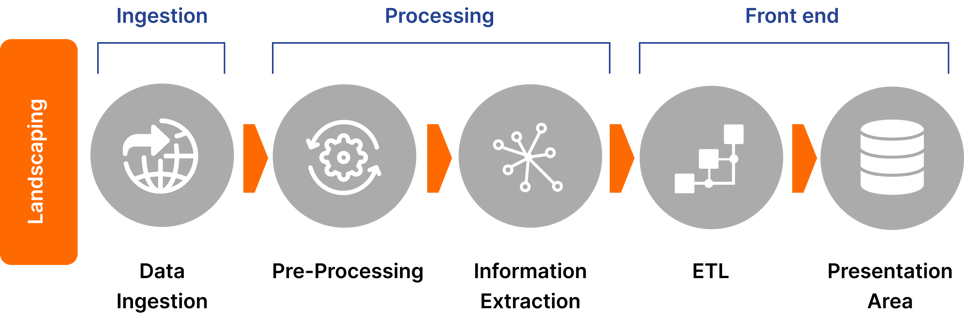

Architecture of Modern Data Pipelines

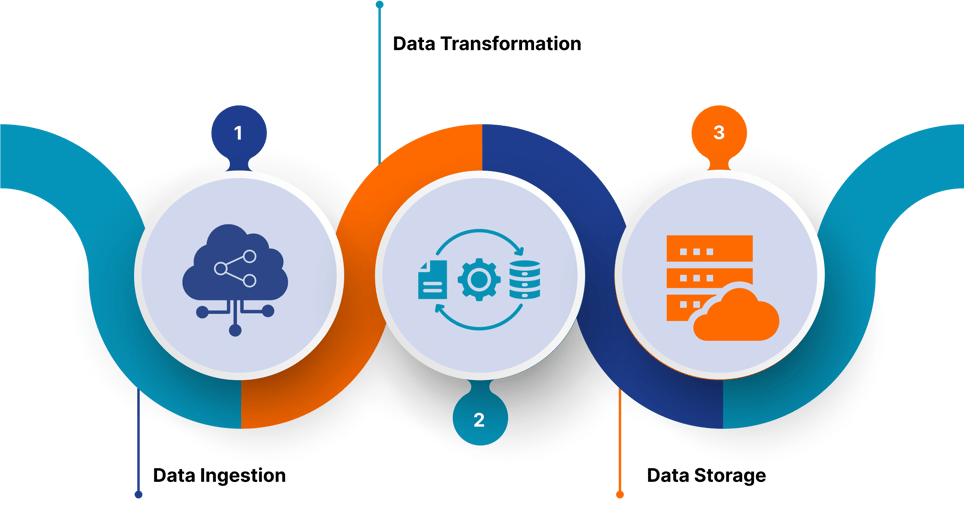

A modern data pipeline has three stages:

1. Data Ingestion

Raw data is pulled from various sources, such as SaaS applications, mobile devices, and IoT sensors, and may be structured or unstructured. It is often stored in a cloud data warehouse, such as Amazon Redshift or Azure Synapse, for flexibility and scalability. Stored this way, the data can be updated continuously and is ready for real-time processing.

2. Data Transformation

The ingested data then undergoes a series of transformations that cleanse, filter, and enrich it. Automation matters at this stage because tasks such as aggregating data or converting formats are repetitive. This stage is essential for keeping the data consistent and preparing it for analysis.

3. Data Storage

The transformed data is kept in a repository where end users can access it. In a streaming context, the processed data is delivered to subscribers or consumers, making it available for real-time or batch processing.
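The three stages above can be sketched as three small functions chained together. This is a simplified illustration only: the `RAW_EVENTS` sample, the function names, and the plain dictionary used as a repository are assumptions made for the example, not part of any particular platform.

```python
import json

# Hypothetical raw events, standing in for SaaS, mobile, or IoT sources.
RAW_EVENTS = [
    '{"device": "sensor-1", "temp_c": "21.5"}',
    '{"device": "sensor-2", "temp_c": null}',
    '{"device": "sensor-1", "temp_c": "22.5"}',
]


def ingest(lines):
    """Stage 1: parse raw records pulled from the source."""
    return [json.loads(line) for line in lines]


def transform(records):
    """Stage 2: drop incomplete readings, convert types, then aggregate."""
    readings = {}
    for rec in records:
        if rec["temp_c"] is None:
            continue  # cleanse: skip readings with no value
        readings.setdefault(rec["device"], []).append(float(rec["temp_c"]))
    # aggregate: average temperature per device
    return {dev: sum(vals) / len(vals) for dev, vals in readings.items()}


def store(result, repository):
    """Stage 3: write the processed data where consumers can read it."""
    repository.update(result)
    return repository


repository = store(transform(ingest(RAW_EVENTS)), {})
print(repository)
```

Keeping each stage as a separate function makes it easier to automate, test, and replace one stage without touching the others.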

Best Practices for Handling Large-Scale Data Processing

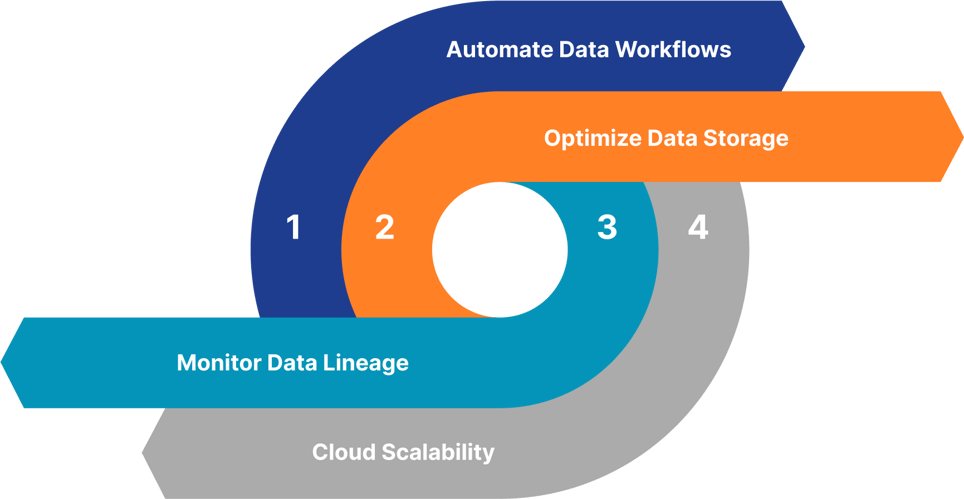

Effectively handling large volumes of data requires implementing best practices to ensure performance, accuracy, and scalability:

Automate Data Workflows

Automation reduces human error and streamlines repetitive data processing tasks.

Optimize Data Storage

Use a combination of data lakes and data warehouses to balance the storage needs of structured and unstructured data.

Monitor Data Lineage

Use data lineage to understand how data changes as it moves through the pipeline. Tracking lineage also supports compliance with regulatory requirements.

Cloud Scalability

Use cloud-native services that scale with demand and can be tuned for performance.
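To make the lineage practice above more concrete, the following sketch records each transformation step alongside the data it produced. It is one possible approach under simple assumptions: the `TrackedDataset` class and its `apply` method are hypothetical names invented for this example, and real lineage tooling typically captures far more metadata.

```python
from dataclasses import dataclass, field


@dataclass
class TrackedDataset:
    """A dataset that carries a record of every step applied to it."""

    rows: list
    lineage: list = field(default_factory=list)

    def apply(self, step_name, func):
        """Run one transformation and return a new dataset with a lineage entry."""
        new_rows = func(self.rows)
        entry = {
            "step": step_name,
            "rows_in": len(self.rows),
            "rows_out": len(new_rows),
        }
        return TrackedDataset(new_rows, self.lineage + [entry])


raw = TrackedDataset([3, -1, 4, -1, 5])
clean = raw.apply("drop_negatives", lambda rows: [r for r in rows if r >= 0])
doubled = clean.apply("double", lambda rows: [r * 2 for r in rows])

for entry in doubled.lineage:
    print(entry)
```

Because each call returns a new dataset rather than mutating the old one, the history of any intermediate result remains available for audits.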

Ensuring Data Quality

Data quality is critical to the success of modern data pipelines. Without clean, accurate data, insights drawn from the analysis may be flawed. To ensure high-quality data:

Set Up Validation Rules:

Use automated checks to flag and correct inconsistencies during ingestion and transformation.

Embed Data Governance:

Establish organization-wide governance frameworks so that data is processed safely and in line with privacy regulations such as GDPR or HIPAA.

Monitor in Real Time:

Real-time monitoring tools surface issues as they occur so that they can be resolved quickly.
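The validation rules mentioned above could look something like the following sketch, which checks each record against a set of named rules and separates clean records from flagged ones. The rule names, the sample records, and the `validate` function are hypothetical and chosen only for illustration. The sketch flags problems but does not attempt to correct them.

```python
# Hypothetical rules: each maps a rule name to a predicate over one record.
RULES = {
    "email_present": lambda rec: bool(rec.get("email")),
    "age_in_range": lambda rec: isinstance(rec.get("age"), int)
    and 0 <= rec["age"] <= 120,
}


def validate(records, rules=RULES):
    """Split records into valid ones and (record, failed_rule_names) pairs."""
    valid, flagged = [], []
    for rec in records:
        failed = [name for name, check in rules.items() if not check(rec)]
        if failed:
            flagged.append((rec, failed))
        else:
            valid.append(rec)
    return valid, flagged


records = [
    {"email": "a@example.com", "age": 34},
    {"email": "", "age": 34},
    {"email": "b@example.com", "age": 250},
]
valid, flagged = validate(records)
print(len(valid), [failed for _, failed in flagged])
```

Returning the names of the failed rules with each flagged record gives a monitoring step something specific to report.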

Conclusion

Modern data pipelines are necessary for processing the large volumes of data that businesses generate today. Choosing the right workflow, whether ETL or ELT, helps architects ensure efficiency, scalability, and accuracy in their data systems.

Evermethod, Inc. understands the challenges involved in building and maintaining modern pipelines. We provide tailored solutions that are scalable, secure, and designed to address businesses' exact data demands.

Whether you're dealing with structured, unstructured, or streaming data, Evermethod, Inc.'s expertise ensures that your data pipelines run smoothly, driving actionable insights and improved decision-making.

Streamline your data processes now! Contact Evermethod, Inc. to discover modern pipeline solutions tailored to your company.
